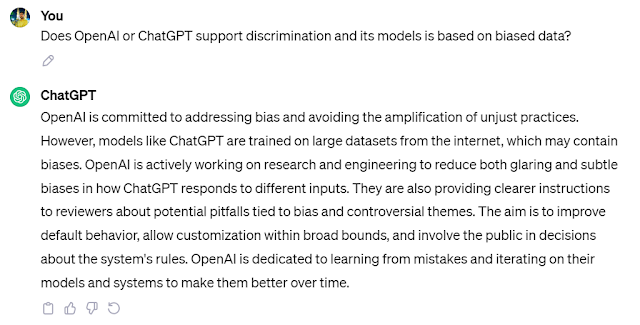

If you've ever chatted with ChatGPT or Claude and noticed they sometimes get basic facts wrong or contradict themselves, you're not imagining things. This is one of the biggest headaches in AI development right now, and it's harder to solve than you might think.

The Memory Problem That's Driving Engineers Crazy

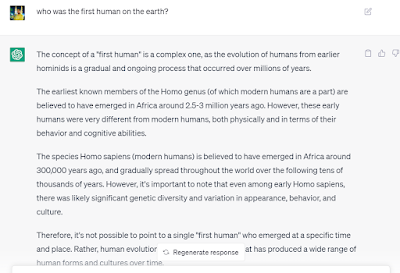

Large language models like GPT-4 or Claude are basically pattern-matching machines on steroids. They've read millions of books, articles, and web pages during training, but here's the weird part – they don't actually "remember" facts the way humans do. Instead, they're incredibly good at predicting what word should come next based on patterns they've seen before.

This creates some bizarre situations. An AI might tell you that Paris is the capital of France in one sentence, then confidently state that London is France's capital two paragraphs later. It's not lying or trying to trick you – it genuinely doesn't have a consistent internal fact-checking system.
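
To make "predicting the next word" concrete, here is a deliberately tiny sketch: a bigram model that only counts which word followed which in a three-sentence toy corpus. The corpus and the `train_bigrams` / `predict_next` helpers are illustrative inventions, not part of any real system. Real LLMs condition on thousands of preceding tokens with a neural network rather than one word with a lookup table, but the training objective, guessing what comes next, is the same.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    # most_common breaks ties by the order words were first counted
    return followers.most_common(1)[0][0]


corpus = [
    "the capital of france is paris",
    "the capital of england is london",
    "the capital of italy is rome",
]
model = train_bigrams(corpus)
print(predict_next(model, "capital"))  # of
print(predict_next(model, "is"))       # paris, even if the question was about England
```

Because this toy model only looks at the word "is", it answers "paris" after "the capital of england is" too: it has learned a frequent pattern, not a fact about England.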

Why This Matters More Than Ever

As these models get integrated into search engines, educational tools, and business applications, getting facts right isn't just nice to have; it's essential. Nobody wants their AI assistant confidently telling them the wrong dosage for a medication or giving incorrect historical dates for a research paper.

The stakes are particularly high in fields like:

- Healthcare and medical advice

- Financial planning and investment guidance

- Legal research and compliance

- Educational content for students

- News and journalism

The Technical Challenge Behind the Scenes

Here's what makes this problem so tricky to solve. Traditional databases store facts in neat, organized tables where you can easily look up "What is the capital of France?" But language models store information as billions of numerical weights connecting artificial neurons. There's no single row where the fact "Paris is the capital of France" lives; it's spread across many of those weights.

When the model generates text, it's not consulting a fact database. It's using statistical patterns to predict what sounds right based on its training. Sometimes those patterns align with factual accuracy, sometimes they don't.
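
The contrast between a lookup and a prediction can be sketched in a few lines. The probabilities below are invented for illustration, and `sample_next_word` is a hypothetical helper that applies the usual temperature rescaling: raising each probability to the power 1/T and renormalizing, which is equivalent to dividing the logits by T before the softmax.

```python
import random

# A database lookup is deterministic: the same key always returns the same value.
facts = {"capital of France": "Paris"}

# A language model instead assigns probabilities to candidate next words.
# These numbers are made up for illustration.
next_word_probs = {"Paris": 0.90, "Lyon": 0.06, "London": 0.04}


def sample_next_word(probs, temperature=1.0, rng=random):
    """Sample one word; temperature < 1 sharpens the distribution, > 1 flattens it."""
    words = list(probs)
    # p ** (1 / T) is proportional to softmax(logits / T); random.choices normalizes weights.
    weights = [probs[w] ** (1.0 / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)
print(facts["capital of France"])  # always Paris
samples = [sample_next_word(next_word_probs, rng=rng) for _ in range(1000)]
print({w: samples.count(w) for w in next_word_probs})  # mostly Paris, a few other cities
```

At a low temperature the sampler almost always says Paris; at temperature 1.0 it occasionally picks another city even though Paris is by far the most likely answer, which is one way the same model can state different "facts" in different places.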

Current Solutions and Their Trade-offs

Researchers are attacking this problem from several angles, each with its own pros and cons:

Retrieval-Augmented Generation (RAG): This approach connects the AI model to external databases or search engines. When asked a factual question, the system first retrieves relevant documents and passes them to the model, which then generates its response from that material. Companies like Microsoft and Google are investing heavily in this approach.

The upside? Much better factual accuracy for recent information. The downside? It's slower, more expensive, and doesn't help with the model's internal consistency.
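
The retrieval step can be sketched with nothing but the standard library. This is a minimal illustration under strong assumptions: the three-document knowledge base is made up, simple word overlap stands in for a real search index or embedding similarity, and the call to a language model is left out, so the sketch stops at the prompt that would be sent.

```python
import re

# Hypothetical knowledge base; in practice this would be a search engine or vector store.
DOCUMENTS = [
    "Paris is the capital and largest city of France.",
    "London is the capital of the United Kingdom.",
    "The Eiffel Tower was completed in 1889.",
]


def tokenize(text):
    """Lowercase and split into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    return sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_prompt(question, passages):
    """Put numbered passages ahead of the question so the answer can be grounded and cited."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources below, citing them like [1].\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


question = "What is the capital of France?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)  # this string would then be sent to the language model
```

Word overlap is crude: the shared word "capital" pulls in the London passage as the second hit. The extra retrieval step is also where the added latency and cost come from.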

Knowledge Editing Techniques: Some teams are working on ways to directly modify the model's internal representations of facts. Think of it like performing surgery on the AI's "brain" to correct specific pieces of information.

This is promising but incredibly complex. Change one fact and you might accidentally mess up dozens of related concepts the model has learned.
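
Why editing one fact can disturb others shows up even in a toy model. The sketch below treats a single weight matrix as a key-to-value memory and applies a rank-one update, W' = W + (v - Wk)kᵀ / (kᵀk), which forces the key k to map to a new value v. This is a simplified illustration in the spirit of rank-one editing methods such as ROME, not any published method's exact update; the 2×2 matrix and the "France" and "Belgium" key vectors are invented.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rank_one_edit(W, key, new_value):
    """Return W' = W + (new_value - W @ key) key^T / (key . key), so W' @ key == new_value."""
    residual = [v - o for v, o in zip(new_value, matvec(W, key))]
    scale = dot(key, key)
    return [[w + r * k / scale for w, k in zip(row, key)] for row, r in zip(W, residual)]


W = [[1.0, 0.0], [0.0, 1.0]]  # toy memory: initially returns each key unchanged
key_france = [1.0, 0.0]       # hypothetical representation of "France"
key_belgium = [0.8, 0.6]      # a related concept whose representation overlaps
key_unrelated = [0.0, 1.0]    # orthogonal to key_france

W_edited = rank_one_edit(W, key_france, new_value=[0.0, 2.0])
print(matvec(W_edited, key_france))     # [0.0, 2.0]: the targeted fact changed
print(matvec(W, key_belgium), matvec(W_edited, key_belgium))  # differs: a side effect
print(matvec(W_edited, key_unrelated))  # [0.0, 1.0]: untouched because orthogonal
```

The change to `key_belgium` grows with how much its vector overlaps the edited key; only orthogonal keys are left exactly as they were. Related concepts tend to have overlapping representations, which is why an edit can ripple outward.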

Training on Curated Datasets: Another approach focuses on being more selective about training data. Instead of feeding models everything on the internet (including plenty of misinformation), researchers are creating high-quality, fact-checked datasets.

The challenge here is scale. The internet has way more content than any human team could fact-check, but that messy, contradictory data is also what makes models so versatile.

What's Working in Practice

Some of the most promising real-world improvements come from hybrid approaches:

Multi-step Verification: Instead of generating answers in one shot, newer systems break down complex questions into steps and verify each piece. This catches more inconsistencies before they reach the user.

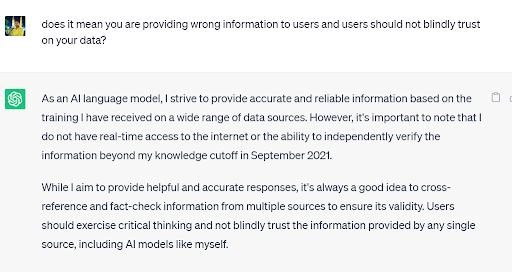
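
Two of those verification checks can be sketched under simplifying assumptions: suppose a draft answer has already been broken into (subject, relation, object) claims (real systems typically use a model for that decomposition step) and a small trusted reference is available. The claims, the reference, and both helper functions below are hypothetical.

```python
from collections import defaultdict

# Hypothetical claims extracted from a draft answer, including a self-contradiction.
draft_claims = [
    ("France", "capital", "Paris"),
    ("France", "currency", "euro"),
    ("France", "capital", "London"),
]
# Hypothetical trusted reference for the per-claim check.
reference = {("France", "capital"): "Paris", ("France", "currency"): "euro"}


def find_contradictions(claims):
    """Flag any (subject, relation) pair the draft answers in more than one way."""
    objects = defaultdict(set)
    for subject, relation, obj in claims:
        objects[(subject, relation)].add(obj)
    return {pair: objs for pair, objs in objects.items() if len(objs) > 1}


def check_against_reference(claims, ref):
    """Flag claims that disagree with the reference; facts it lacks are skipped."""
    return [
        (subject, relation, obj)
        for subject, relation, obj in claims
        if (subject, relation) in ref and ref[(subject, relation)] != obj
    ]


print(find_contradictions(draft_claims))                 # flags ('France', 'capital')
print(check_against_reference(draft_claims, reference))  # [('France', 'capital', 'London')]
```

A fuller pipeline might send flagged claims back for regeneration or drop them before the answer is shown.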

Confidence Scoring: Models are increasingly being trained to express uncertainty. When they're not sure about a fact, they're meant to say so rather than confidently state something wrong.
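
Training a model to express uncertainty is beyond a short snippet, but a common system-level proxy can be sketched: ask the same question several times, take the majority answer, and treat the share of samples that agree as a rough confidence score, abstaining below a threshold. This follows the idea behind self-consistency checks; the 0.7 threshold and the sample answers are arbitrary choices for illustration.

```python
from collections import Counter


def answer_with_confidence(samples, threshold=0.7):
    """Majority-vote over sampled answers; abstain when agreement is below threshold."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    normalized = [s.strip().lower() for s in samples]
    answer, votes = Counter(normalized).most_common(1)[0]
    confidence = votes / len(normalized)  # share of samples agreeing with the majority
    if confidence < threshold:
        return "I'm not sure.", confidence
    return answer, confidence


# Hypothetical answers from asking the same question five times.
print(answer_with_confidence(["Paris", "Paris", "paris", "Paris", "Lyon"]))  # ('paris', 0.8)
print(answer_with_confidence(["1912", "1915", "1912", "1921", "1908"]))     # ("I'm not sure.", 0.4)
```

Agreement is only a proxy: a model can be consistently wrong, so a high score means "stable", not "verified".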

Source Attribution: Some systems now cite their sources, making it easier for users to verify information independently.

The Road Ahead

The honest truth? We're still in the early innings of solving this problem. Current AI models are amazing at many tasks, but they're not ready to replace encyclopedias or fact-checkers just yet.

The next few years will likely see significant improvements through:

- Better integration with real-time information sources

- More sophisticated internal fact-checking mechanisms

- Improved training methods that prioritize accuracy over creativity

- Hybrid systems that combine multiple approaches

What This Means for Users Right Now

While researchers work on these challenges, here's how to get the most accurate information from AI models today:

Ask for sources when possible. Many newer models can cite where their information comes from, making verification easier, but open the cited source yourself: models can misattribute or even invent citations.

Cross-check important facts, especially for medical, legal, or financial advice. AI should supplement human expertise, not replace it.

Be specific in your questions. Vague queries often lead to vague, potentially inaccurate responses.

Pay attention to confidence levels. If a model seems uncertain or gives conflicting information, that's your cue to dig deeper.

The Bigger Picture

Improving factual consistency in AI isn't just a technical challenge – it's about building trust between humans and artificial intelligence. As these systems become more integrated into our daily lives, getting the details right becomes crucial for everything from education to decision-making.

The engineers and researchers working on this problem are tackling one of the fundamental challenges of artificial intelligence: how do you create a system that's both creative and accurate, flexible and reliable?

We're not there yet, but the progress over the past few years has been remarkable. The AI models of 2025 are significantly more factually consistent than those from just two years ago, and that trend shows no signs of slowing down.

The future of AI isn't just about making models smarter; it's about making them more trustworthy. And that's a goal worth working toward.